Concurrent programming

Introduction

Programming is undergoing a major transition right before our eyes. In the olden days, programs were executed sequentially by a relatively simple processor. That straightforward model has been upended by the computing industry’s pursuit of higher performance. Innovations in chip design require new programming paradigms.

For decades, the processor industry was in thrall to Moore’s law: an observation by Henry Moore, CEO of Intel, that the number of transistors on a processor doubled every two years. Another observation, Dennard scaling, stated that a chip’s power consumption would remain constant as the transistors became smaller and denser. Taken together, the two observations correctly predicted that performance per watt would increase rapidly. Between 1998 and 2002, processor speeds increased from 300MHz in a Pentium II to 3GHz in a Pentium 4. That’s ten times faster in four years. For programmers this was a good thing. Processor speeds increased so rapidly that upgrading hardware was the easiest way to improve performance.

Such rapid performance increases could not go on forever. Components had to become smaller and smaller so that more could be packed into the same space. Increasingly tiny components made it more and more difficult to dissipate heat and keep the electrons flowing correctly. Clock speeds increased in smaller and smaller increments until finally they increased no more. Manufacturers desperately sought ways to deliver the performance improvements that their customers had come to expect. With no easy way to further increase clock speeds, they came up with the wheeze of bundling multiple processors, or cores, on to the same chip. And thus the multi-core revolution was born.

In theory, a processor with two cores can do twice as much work in the same time as a processor with a single core. In reality, things are not so simple. Doubling the number of cores does not directly improve performance in the same way that doubling the clock speed does. A single-threaded program on a multi-core processor will utilise a single core and leave the others idle. The CPU cannot automatically spread the computation over multiple cores. We must shift to a new programming paradigm in which tasks execute concurrently on multiple cores.

There is no single, correct concurrency model. Some programming languages are based on a particular model, while other languages support multiple models. In this chapter, we’ll begin by defining some important terms. We’ll move on to some basic concurrency primitives provided by the operating system and their limitations. We’ll then study the interesting and contrasting concurrency models provided by JavaScript and Go.

Concurrency, parallelism and asynchrony

In this chapter we’ll use a few, closely related but distinct concepts. Let’s go through each of them in turn.

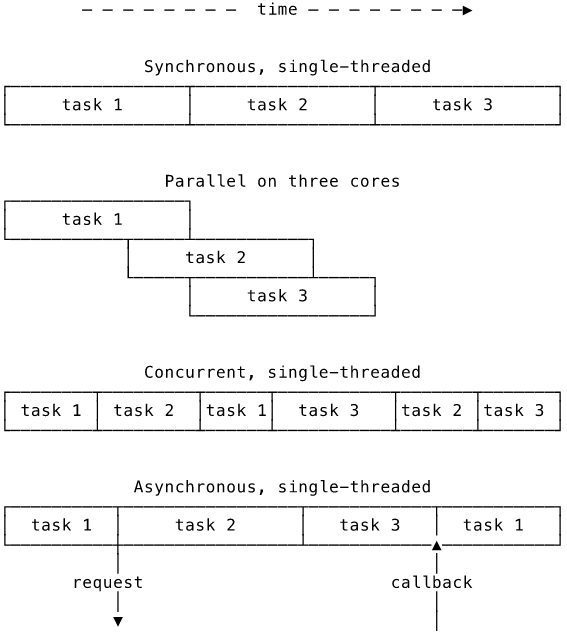

Concurrency means dealing with multiple things at once. A program is concurrent if it is working on multiple tasks at the same time. That doesn’t mean that it has to be literally performing multiple tasks at the same time. Cast your mind back to the multi-tasking scheduler we saw in the OS chapter. On a single-threaded processor, there is only ever one process actually executing at any given time, but the scheduler manages to deal with multiple tasks by giving each slices of processor time. The concurrent behaviour observed by the user is created by the scheduler in software.

Parallelism means actually doing multiple things at once. Parallelism requires hardware support. A single-threaded processor physically cannot execute multiple instructions simultaneously. Either hardware threads or multiple cores are necessary. A problem is parallelisable if it can be solved by breaking it into sub-problems and solving them in parallel. Counting votes in an election is a parallelisable task: count the votes in each constituency in parallel and then sum the totals to find the national result. Parallelisable tasks are desirable in computing because they can easily make use of all available cores.

To take an example, you are reading this book. We can think of each chapter as a separate task. You might choose to read the book from cover to cover (good for you!), one chapter after another. That is analogous to a processor executing tasks sequentially. Alternatively, you might start one chapter and, midway through, follow a reference to a section in another chapter. You are now reading two chapters concurrently. You are literally reading only one chapter at any given moment, of course, but you are in the process of reading two chapters. To read two chapters in parallel, however, you would need to have two heads and two books!

Make sure that you grasp the distinction between concurrency and parallelism. Concurrency is the slightly vague idea of having multiple, unfinished tasks in progress at the same time. It comes from the behaviour of software. Parallelism means doing multiple things literally at the same time and requires hardware support.

The third concept is asynchrony. In synchronous execution, each section of code is executed to completion before control moves to the next. A program that initiates a system operation (e.g. reading a file) will wait for the operation to complete before proceeding. We say that the operation is blocking because the program is blocked from progressing until the operation completes. In asynchronous execution, the program will initiate the operation and move on to other tasks while it waits for the operation to complete. The operation is therefore non-blocking. In JavaScript, an asynchronous language, to perform an operation you must request it from the runtime environment and provide a callback function for the runtime to execute when the request completes. Asynchronous execution is therefore a form of concurrency.

We saw in the scheduling section of the OS chapter that threads can be either CPU-bound, meaning they need to do work on the processor, or I/O-bound, meaning they are waiting for an I/O operation e.g. reading a value from memory or sending a file over a network. When a thread makes an I/O request via a system call, the scheduler marks the thread as blocked pending request completion and schedules something else. The problem is that one blocked thread is enough to block an entire process, even though other threads within the process might still have available work to do.

The aim of concurrent programming is to multiplex multiple tasks on to a single thread. From the scheduler’s perspective, the thread will seem completely CPU-bound and so won’t be suspended. There are a few things that need to be in place for this to work. The operating system will need to provide non-blocking versions of system calls. The program will need to somehow manage scheduling its own tasks. This is difficult to get right and so it is normal to rely on a library or a language-specific runtime that takes care of all the scheduling. When I refer to “scheduler” from now on, I mean this scheduler internal to the program, rather than the OS scheduler, unless I specify otherwise.

Determinacy and state

Concurrency seems pretty cool. We can write code that handles multiple things at once and we don’t have to worry about anything blocking the whole program. What’s not to like?

The problem is non-determinacy. In a synchronous program, we know the order in which tasks execute and so we can predict how they will affect the system. For example, function A writes a value to memory and then function B reads that value. The code generates the same output every time it is called. In a concurrent program, the executing tasks are arbitrarily interleaved by the scheduler. It is entirely possible that function B will run first and read the value before function A writes it, thus generating a different output. Worse, that will only happen sometimes: the scheduler can choose a different ordering every time. The upshot is that the program’s output may vary depending on how its tasks have been scheduled. This is non-determinism. It is very difficult to reason about code and have assurances about its correctness in the presence of non-determinism. How can you reliably test code that breaks only rarely? Perhaps when you write and test the code, the scheduler consistently chooses an order that functions correctly. Then, weeks from now, for whatever reason, the scheduler chooses an ordering that breaks your code and everything blows up in production.

The root cause of the problem with functions A and B above is not so much the random interleaving but the fact that the two functions have shared, mutable state. I say “shared” because they both have read access to the same memory addresses and “mutable” because they both can modify the data in those addresses. If there were no shared, mutable state between the two functions, it wouldn’t really matter in which order they were executed.

A classic example of the problems in shared, mutable state is a broken counter implemented using two threads (in Java-like pseudo-code):

1 function main() {

2 Thread[] threads = new Thread[2];

3 int count = 0;

4

5 for (thread : threads) {

6 thread.run() {

7 for (int i = 0; i < 10000; i++) {

8 count++;

9 }

10 }

11 }

12

13 System.out.println("Got count " + count + ", expected " + (2 * 10000));

14 }

In this code, we start two threads, each incrementing count 10,000 times. You might think that this is a faster way to count to 20,000 because the work is split between two threads. In fact, on a system with multiple hardware threads or cores, the program doesn’t even work properly and count will be lower than expected. Its exact value will vary each time the program runs, due to non-determinacy.

Each thread has to read count, increment its value and write the modification back to the variable. The problem is that both threads are trying to do that at the same time. Here’s an example of how things will go wrong. Thread one starts and reads the initial value. Depending on the scheduler’s mood, there is a chance that it will suspend thread one and schedule thread two. The second thread will run for a while, incrementing count by some amount. The scheduler then swaps the threads and thread one picks up from where it left off. Crucially, thread one will still hold the same value it had for count when it was first suspended. It will be completely unaware of the changes made by thread two and so its modifications will overwrite thread two’s work. This happens repeatedly, leaving count’s final value far off the expected 20,000.

When multiple threads compete to update shared, mutable state, we have what is know as a data race. More generally, competition for access to any resource is known as a race condition. The observed outcome depends on which thread wins the “race”, as determined by the order the scheduler chooses to run them in. This is the source of the observed non-determinism and a relatively funny joke (by computing joke standards):

What do we want?

- Now!

When do we want it?

- No race conditions!

Race conditions occur when two or more threads simultaneously execute a critical section of code. Only one thread may be in the critical section at any time. The critical section in the example above is count++, which is just a single line of code but actually involves reading the current value, incrementing it and then writing it back out. The way to achieve safe, concurrent code without race conditions and non-determinism is to control, or synchronise, access to critical sections to prevent multiple threads entering them at the same time. All of the concurrency models in this chapter solve this problem in different ways.

Threads and locks

The simplest concurrency approach a language can take is to only support the basic concurrency primitives provided by the underlying operating system. If the programmer wants to do anything fancy, they’ll have to build it themselves (or find a library implementation). In Ruby, for example, a network request will block by default:

1 response = Net::HTTP.get('example.com', '/index.html')

2 # execution waits until response is ready

If you want concurrency within a Ruby program, you need to create threads. Remember that threads operate within the same process’s address space. If a program creates three threads, it will have a total of four (including the original process thread) independently executing threads, all jumping around the address space and changing things as they please. Since they share the same address space, a change made by one thread will be immediately visible to the others: shared, mutable state.

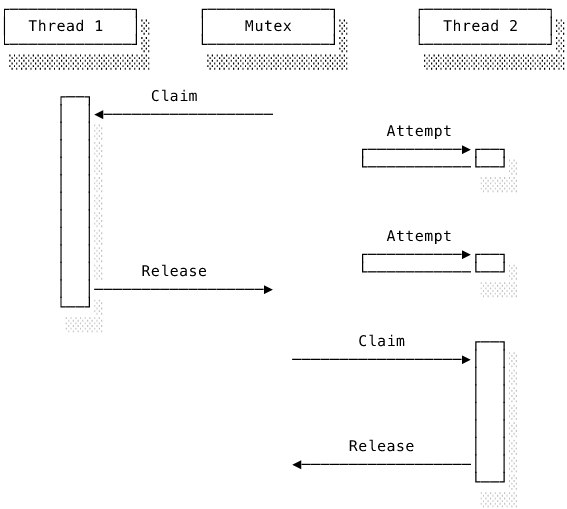

Operating systems provide a very simple synchronisation mechanism known as the lock. If you want to make something inaccessible, you lock it away. A computing lock is no different. A lock is a record that a particular thread has exclusive access to a particular resource. A thread claims a resource by recording that it is using the resource. It does its work and, when finished, releases the lock by erasing the record. A second thread coming along and trying to access the resource must wait for the lock to be released before it can claim the lock for itself. By requiring that a thread claim a particular lock before entering a critical section and releasing the lock when it leaves, we ensure that only one thread is ever in the critical section. Such locks are also known as mutexes (from “mutual exclusion”).

Note that thread two is blocked when it cannot claim the lock. Mutual exclusion means that accesses to the resource are now performed synchronously. The synchronisation solves our race condition problem but removes the concurrency, which was the whole purpose in the first place. Achieving the optimal balance of correctness and concurrency via locks requires choosing the correct granularity. Ideally, we want to keep critical sections as small as possible while still preventing non-determinism.

More sophisticated locks allow for more precise access control. Threads needing only to read a value can each claim read-only, shared locks. Should another thread need to modify the value, it can claim an exclusive lock but only when there are no shared locks. Databases frequently use such mechanisms to coordinate concurrent reads and writes. A problem here is when there are so many reads that there is always at least one shared lock in place and so a modifying thread is starved and can never claim its lock.

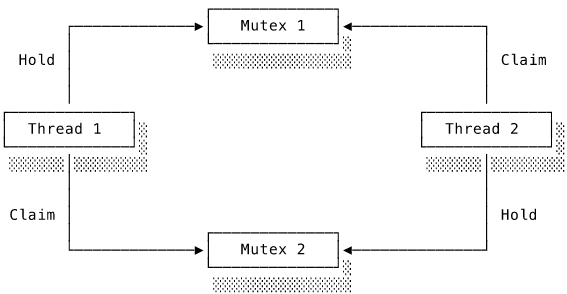

Still worse, using locks on multiple resources can lead to a situation known as deadlock. This occurs when two threads have each claimed one lock and are waiting for the other thread to release the other lock. Both threads are stuck in an infinite loop.

To avoid deadlocks, locks must always be claimed and released in the same order. That sounds straightforward but is no simple task in a complex application. Many very competent developers have horrendous stories of days spent tracking down very subtle bugs caused by incorrect lock usage. I think part of the problem is that you have to read code in a very different way. Look at the example below (again in Java-like pseudo-code):

1 function transfer(Account sender, Account receiver, uint amount) {

2 claimLock(sender) {

3 claimLock(receiver) {

4 sender.debit(amount);

5 receiver.credit(amount);

6 }

7 }

8 }

9

10 Thread1.run() { transfer(a, b, 50); }

11 Thread2.run() { transfer(b, a, 10); }

At first glance, this seems fine because we consistently claim first the sender lock and then the receiver lock before performing the transfer. When reading the code, however, try to imagine the various ways the two threads might be interleaved. One possible ordering is where Thread1 claims a lock on a and then Thread2 is scheduled and claims a lock on b, resulting in deadlock because neither thread can claim the second lock. In this example, it’s fairly easy to spot how the lock orderings are reversed Now imagine that the two calls to transfer are in completely different areas of the codebase and only occasionally run at the same time. The potential for deadlock would not be at all obvious.

When reading code, it’s natural to make a sequential, synchronous mental thread of execution. When dealing with multiple threads, you have to remember that between any two operations the thread may have been paused and another scheduled thread might have changed any of the shared, mutable state. A value that was true in the conditional of an if statement might have become false by the time the block of the statement is executed. This makes multi-threaded programming using locks deceptively difficult to do correctly. Operating systems and databases use locks extensively to implement very sophisticated concurrent systems, but they are notorious for having very difficult to understand sections of code. For mortal developers who don’t write operating systems and databases on a regular basis, it is better to think of locks as the building blocks from which easier to use concurrency models are constructed. In the next two sections, we’ll examine the concurrency models provided by JavaScript and Go.

JavaScript and the event loop

JavaScript was originally designed to run in web browsers. The browser is a perfect example of a highly concurrent application. At any one moment, it might have to parse HTML, dispatch events, respond to a network request, react to user input and more. Many of those tasks will require some JavaScript execution. For example, when the browser detects that the user has clicked on a DOM element, it must dispatch an event to any registered JavaScript event listeners. JavaScript execution is organised around a simple design pattern known as the event loop, which is frequently found in event-driven programs. In an event loop, a main thread continually checks for and handles incoming events or tasks. Other threads generate events to be processed by the main loop.

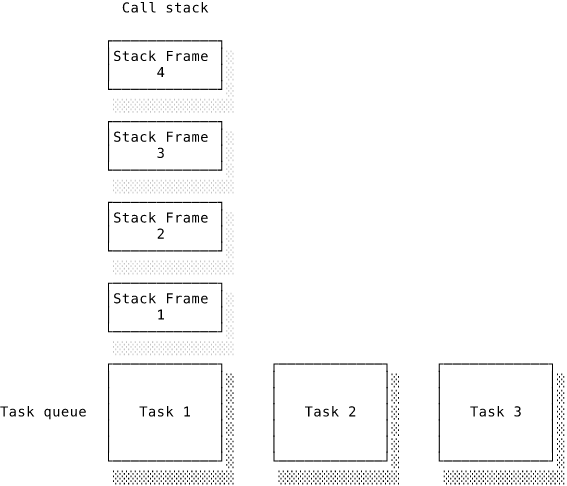

Every browser has a JavaScript engine, or runtime, which is in charge of running JavaScript code. In the case of Chrome, the engine is called V8 (also used by node.js). Whenever some JavaScript needs to be executed, it is bundled into a task. The engine maintains a queue of pending tasks known as the task queue. The engine runs a loop, continually pulling tasks from the head of the task queue and passing them to the JavaScript thread for execution. A separate structure, known as the call stack, tracks the execution of the current task. There is only one thread consuming the queue, so there is only one call stack and the engine can only execute one JavaScript task at once. The call stack operates just like the process’s stack managed by the OS. The engine pushes and pops stack frames to and from the call stack as the JavaScript thread calls and returns from functions in the task. When the call stack is empty, the task is complete and the engine moves on to the next task.

The engine uses only a single JavaScript thread to execute the tasks sequentially. This single-threaded form of concurrency avoids all of the nastiness of shared, mutable state. Programming in JavaScript is therefore significantly easier than programming in a multi-threaded environment, although getting used to callbacks and asynchronous code does take some effort.

You might be wondering at this point: “how can JavaScript be concurrent and non-blocking if it only has a single thread?”. While there is only one thread executing JavaScript, the engine will have other, background threads performing network requests, parsing HTML parsing, responding to user input and so on. The JavaScript execution model relies heavily on the multi-threaded engine to handle all of the tricky, asynchronous stuff by processing operations concurrently and maintaining the task queue.

Asynchronous, non-blocking I/O is simple to implement in this model. When JavaScript triggers an I/O operation, it provides the engine with a callback function to be executed when the operation completes. The engine uses a background thread to request the operation from the OS. While that thread waits for the OS to provide the response, the JavaScript thread continues on its merry way working through the task queue. When the OS completes the I/O request, it wakes the requesting thread. That thread creates and pushes to the queue a new task in which the callback receives the I/O response as an argument. Eventually, that task reaches the head of the queue and is executed.

The downside is that the programmer has very limited control over which tasks are executed in which order. There is no way to suspend the correct task or reorder pending tasks. Since there’s only a single JavaScript thread, a computationally intensive task that takes a long time to complete will block subsequent tasks. You might see JavaScript mistakenly described as non-blocking in general. That is incorrect. It only offers non-blocking I/O. A node.js server, for instance, can easily become unresponsive by performing a computationally intensive task in a request handler. Since browsers usually do rendering updates after each task completes, slow tasks can even freeze web pages by blocking UI updates. Just try running the following in your browser console:

1 function blocker() {

2 while(true) {

3 console.log("hello")

4 }

5 }

6 blocker()

This loop runs synchronously and blocks the event loop. The current task only finishes when the call stack is empty, but this script will never have an empty call stack because it contains an infinite loop. Eventually, Firefox has had enough and asks me to terminate the script. Thus, the first rule of writing performant JavaScript is: don’t block the event loop.

Though simpler than multi-threaded code, JavaScript’s asynchronous style still takes some getting used to. It can be hard to work out in what order things will happen if you aren’t familiar with the event loop, but there are actually only a few simple rules. For example:

1 function example() {

2 console.log("1");

3

4 window.setTimeout(() => console.log("2)", 0);

5

6 console.log("3");

7 }

8

9 example();

What do you think the output of this example will be? The JavaScript engine starts off executing the top level in the first task. It defines the example function and executes it at line nine, creating a stack frame in the process. JavaScript requests that the engine perform the console.log("1") operation, which it does synchronously (without enqueuing a task) by outputting 1 to the console. We are still within the first task. Next, JavaScript requests that the engine starts a timer for 0 milliseconds and provides it a callback for when the timer is ready. The timer ends immediately and the runtime enqueues a new task with the callback. However, the first task has not finished yet! While the runtime is dealing with the timer, the JavaScript thread makes the second console.log request. It then returns from example, removing its stack frame. The call stack is now empty and the first task is complete. Assuming there are no other tasks, the JavaScript thread executes the callback task and requests the final console.log. The output is therefore:

1 1

2 3

3 2

Using window.setTimeout(callback, delay) with no delay value (or zero) doesn’t execute the callback immediately, as you might expect. It tells the runtime to immediately enqueue the callback task. Any tasks already queued will be executed before the timeout callback. Since JavaScript can only ever see the head of the queue, we have no idea how many tasks are already queued or how long it’ll take the callback task to reach the head of the queue. This makes JavaScript a real pain to use for anything requiring very precise timing.

The picture is further complicated by micro-tasks (also known as jobs). These slippery little creatures are like high priority tasks. They go in a separate queue that is processed after callbacks and between tasks. Promises and their handlers, which we’ll look at in more detail below, are good examples of micro-tasks. In this example, what do you think the output will be?

1 function example() {

2 console.log("1");

3

4 window.setTimeout(() => console.log("2"));

5 Promise.resolve().then(() => console.log("promise"));

6

7 console.log("3");

8 }

9

10 example();

We know that specifying no timeout value for window.setTimeout enqueues the callback. The promise callback is also enqueued, but because promise handlers are micro-tasks, it wriggles in ahead of the timeout callback:

1 1

2 3

3 promise

4 2

Treating micro-tasks in this way improves the responsiveness of the system. The whole point of promise callbacks is that they are executed as soon as possible after the promise resolves, so it makes sense to give them priority. Sending them to the back of the task queue could cause an unacceptably long delay.

To sum up, JavaScript’s concurrency model provides single-threaded, asynchronous execution with non-blocking I/O. It relies heavily on the JavaScript engine to process operations concurrently and enqueue tasks. The JavaScript thread only sees a sequence of discrete tasks with no shared, mutable state. The programmer doesn’t have to worry about data races or deadlocks, but in exchange they give up a lot of control. JavaScript is not particularly well-suited to computationally intensive work because slow running tasks block the event loop until they are complete. It is better suited to I/O-bound work, such as servers. A node.js server can easily handle thousands of concurrent requests in a single thread, all thanks to the event loop.

Managing asynchronous execution

Now that we understand how the underlying runtime works, let’s look at how a JavaScript programmer can structure their asynchronous code. The most simple approach, as already seen above, is to provide a function that the runtime should use to call back into JavaScript when the operation completes. Callbacks appear throughout the browser APIs (e.g. setTimeout, addEventListener) but became ubiquitous in node.js. The thing to remember about callbacks is that the callback function executes later, when the response is ready. Performing a sequence of asynchronous operations therefore requires nested callbacks, affectionately known as callback hell:

1 function handleRequest(req, callback) {

2 validateRequest(req, (error, params) => {

3 if (error) return callback(error);

4 fetchFromDB(params, (error, results) => {

5 if (error) return callback(error);

6 generateResponse(results, callback);

7 });

8 });

9 }

10 handleRequest(req, (error, response) => {

11 if (error) {

12 console.error(error);

13 }

14 console.log(response);

15 });

There are various techniques to make it look a little better, but you can’t get away from the fundamental weirdness that the program’s control flows through the callbacks. The nature of asynchronous code is that a variable’s value depends on when the current scope is executing. An operation’s result only exists in the scope of its callback. That makes sense because, as we’ve just seen, the callback task is only created when the operation completes. We must be careful to structure our code so that tasks needing the result are only scheduled when the result is available. That’s why, in the example above, we have to call fetchFromDB from validateRequest’s callback: it’s only in that scope that the necessary params exists.

Enter the promise. A promise (also known as a future in other languages) is a value representing some asynchronous operation. While the operation is pending, the promise has an ambiguous value. At some future point, when the operation completes, the promise’s value resolves into the result of the computation (or an error in case of failure). Since promises are values just like any other JavaScript value, they can be stored in data structures and passed to and from functions. We can register handlers to execute when the promise resolves by calling .then(), which returns the same promise to allow for chaining:

1 function handleRequest(req) {

2 return validateRequest(req)

3 .then(fetchFromDb)

4 .then(generateResponse);

5 }

6

7 handleRequest(req)

8 .then(response => console.log(response)

9 .catch(err => console.error(err));

Promises introduce the concept of an asynchronous value. Notice that handleRequest above returns a promise. That’s more meaningful than returning undefined and having to pass in a callback to access the result. Yet promises are no panacea. Asynchronous operations have to be sequenced as a chain of .then() calls, which are subjectively ugly and objectively very different to standard, synchronous code. Worse, if an error occurs in a promise it is rejected, requiring the .catch() handler at the bottom of the chain. This is different to the normal try / catch error handling in JavaScript and can create subtle bugs if you don’t handle everything properly. We still have to jump through all these syntactic hoops to get things working as we want. Wouldn’t it be better if we could write asynchronous code in the same way we write synchronous code?

So, just after everyone rewrote their callback-based code to use promises (which arrived officially with ES6 JavaScript), the JavaScript community decided that promises were unacceptable after all. A new challenger arrived with ES2017: async/await. The idea is that we tag asynchronous functions with the async keyword. We call them and assign their return values as normal on the understanding that the return value may not actually exist until later. Under the hood, async functions actually return promises. The await keyword creates a synchronisation point by which the promise must be resolved. If the promise has not yet resolved, the task will suspend until the value is available. We can write asynchronous code that looks almost like synchronous code. The main difference is that we must remember to await the return value when we want the resolved value:

1 async function handleRequest(req) {

2 const params = await validateRequest(req);

3 const dbResult = await fetchFromDb(params);

4 const response = await generateResponse(dbResult);

5 console.log(response);

6 return response;

7 }

It’s much easier to see here the sequence of actions than in either the callback or promise examples. Since async/await are syntactic sugar around promises, we can use them directly on promises too. That might help clarify what they are doing. The following async function awaits a promise that resolves after a timeout in order to emulate a slow, asynchronous operation (e.g. a database query):

1 async function slowFetchId() {

2 await new Promise(resolve => setTimeout(resolve, 5000));

3 return 5

4 }

Here we call slowFetchId and assign its return value to id. We log what it contains before and after we await the result:

1 async function example() {

2 var id = slowFetchId()

3 console.log("first id:", id)

4 await id

5 console.log("second id:", id)

6 }

7

8 // Output

9 > first id: Promise {<pending>}

10 // Five seconds later...

11 > second id: Promise {<resolved>: 5}

Since slowFetchId is async, it immediately returns a pending promise. Note that the returned promise represents the whole function example and so it actually contains the separate promise defined within example. When we want the actual value from the promise, we use await to suspend the current task execution until id has settled by resolving into a value or rejecting with an error. slowFetchId is itself waiting on the timeout promise, so it can’t do anything until the runtime triggers the timeout callback, which enqueues a micro-task to resolve the promise and allows slowFetchId to return 5. The promise held in id can now resolve and execution moves on to the final logging statement.

The main limitation of async/await is that any function calling an async function also needs to be marked as async. Making just one function async might cause a whole cascade of tedious refactoring. You might see this referred to as having to “colour” a function. The terminology comes from an influential blog post on the topic, which I have included in the further reading. While not perfect, async/await is a big improvement. There was a dark period when callback hell coexisted with various, mutually incompatible promise libraries. Best practices seemed to change from day to day. Thanks to the combination of built-in promises and async/await, things are now settling down. Using promises, we can express the concept of an asynchronous value and with async/await, we can express asynchronous operations in a clean and consistent manner.

Communicating sequential processes in Go

You might expect Ryan Dahl, the creator of node.js, to be bullish about it as a server platform. In fact, he says:

I think Node is not the best system to build a massive server web. I would use Go for that. And honestly, that’s the reason why I left Node. It was the realisation that: oh, actually, this is not the best server-side system ever.

What does Go offer that node.js doesn’t? Recall that JavaScript (and hence node.js) uses a single-threaded, asynchronous concurrency model. That doesn’t scale to multiple cores. What if we assigned each task to its own thread and relied on the operating system to schedule them on the available cores? Multi-threading, as it is known, is certainly a valid approach. The problem is that context switching between threads requires switching into kernel mode and flushing caches. The operating system doesn’t know how much state can be safely shared between threads so it has to be conservative and clear everything. The performance overhead will be too much for many use cases. If the program implemented its own version of threads in userspace, it could avoid the expensive context switches into kernel mode and reduce the cost of switching.

Concurrency in Go is implemented via goroutines: lightweight, userspace threads created and managed by the Go runtime. A single Go process might contain thousands or even millions of goroutines. Switching between goroutines is much faster than switching between OS threads and is not much slower than a simple function call. To achieve concurrency, a Go programmer simply has to create a goroutine for each concurrent task. If there is suitable hardware support, the Go runtime will even execute them in parallel with no additional effort required from the programmer.

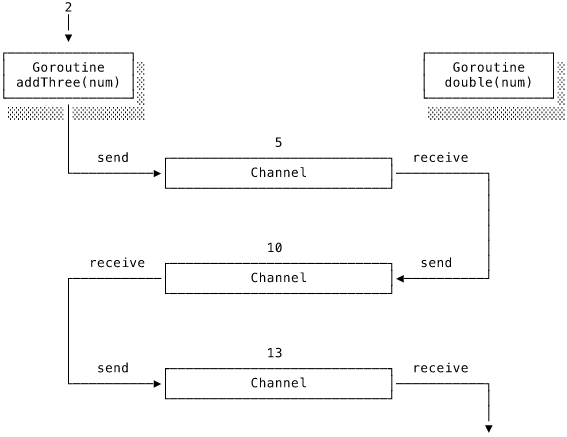

We need some way for the goroutines to share data safely. Modern JavaScript uses promises to represent a value that might change in the future. This mutable state is not shared because JavaScript is single-threaded. Go takes an entirely different approach. We can create communication pipes between goroutines known as channels. Rather than communicating by sharing mutable state, goroutines share data by communicating through channels. They are first class objects in Go, meaning that we can store them in variables and pass them to functions just like any other value.

By default, channels are unbuffered, meaning that they can only hold a single value. Reading from an empty channel and writing to a full channel will both block the current goroutine. That gives another goroutine an opportunity to fill or empty the channel as appropriate. Channel operations therefore naturally create synchronisation points in the code. The select block is a bit of syntax that allows a goroutine to wait on multiple channel operations. In Go, we use channels and select blocks to express the logical dependencies between concurrent goroutines and leave the scheduler to work out the details.

None of these concepts are particularly unique or innovative. The strength of Go’s concurrency model comes from being a simple, well-engineered combination of all three. The theoretical underpinning is known as communicating sequential processes (CSP) and was first developed by C.A.R. Hoare back in 1978 (see further reading).

Scheduling goroutines

Implementing its own form of userspace threads means that the Go runtime has to implement its own userspace scheduler. The OS scheduler plays no part in scheduling goroutines. It only sees the threads of the Go runtime itself. It’s up to the Go runtime to efficiently schedule concurrent goroutines. The Go runtime scheduler tries to avoid the OS scheduler suspending its threads by schedule goroutines so that those threads are continually busy. If the runtime’s threads run out of work or become blocked, the OS scheduler might suspend the entire Go runtime process, halting all goroutines. The OS scheduler can’t see that some other goroutines might have been able to continue doing useful work.

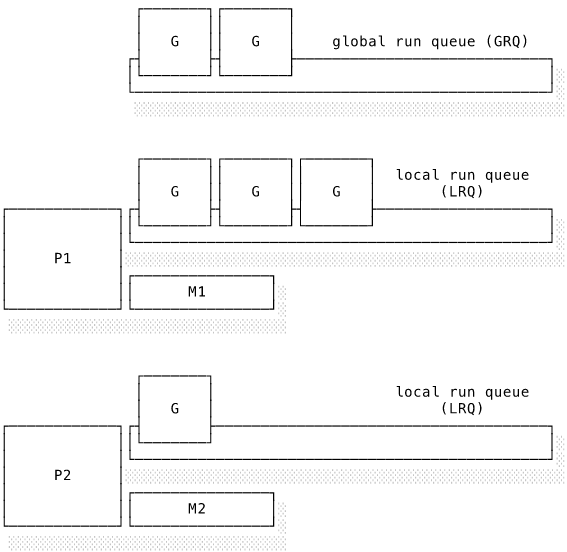

Let’s look at the Go scheduler in more detail. The scheduler creates a logical processor (P) for each virtual core (that is, counting each hardware thread in each physical core). Each P is assigned a physical thread called a machine (M). The OS schedules the M threads according to its own rules. Goroutines (G) are created when the program starts and by using the special go keyword. Each goroutine is scheduled on to an M. Each P has a local run queue (LRQ) of goroutines assigned to it. The logical processor P runs the goroutines from the LRQ on the machine M. There is a separate global run queue (GRQ) for goroutines that aren’t yet allocated a logical processor. It all looks like this:

Goroutines can be in one of three states with fairly self-explanatory names: waiting when it needs some resource in order to continue, runnable when it’s ready to go and executing when it’s actually running on a machine. The scheduler is cooperative because goroutines voluntarily relinquish the machine at defined points, such as system calls and when the go keyword is used. The scheduler doesn’t pre-emptively suspend goroutines as the OS scheduler would do with processes. The Go scheduler implements a work-stealing algorithm: when a logical processor empties its LRQ, it will look for pending work in the global queue or steal pending goroutines from another logical processor. It’s like an annoyingly high achieving colleague who finishes all their work and starts doing some of your pending tickets to fill their time.

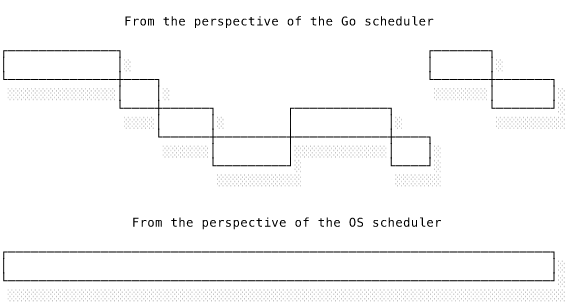

The justification for userspace threads is that switching between them is much faster than switching between OS threads. The Go scheduler takes a set of CPU-bound and I/O-bound tasks and carefully schedules them so that they appear to be one, unbroken, CPU-bound task to the OS scheduler. If the Go runtime is limited to only use a single physical core, things work in a similar way to JavaScript. But unlike JavaScript, Go can easily take advantage of as many cores as the hardware has available. True parallelism is thus a possibility. Running multiple userspace threads over a single OS thread is known as M:N threading, where N goroutines are spread over N system threads.

A disadvantage of goroutines, and userspace threading in general, is that each goroutine requires at least a few kilobytes of memory to store its stack. This is normally inconsequential but something to bear in mind if your application is going to create huge numbers of goroutines. The Go runtime starts each goroutine off with 2Kb of stack space. If the goroutine calls lots of functions and is in danger of running out of stack space, the runtime handles this by allocating a bigger block of memory and copying the entire stack over to the new location. It’s a bit like how dynamic arrays automatically reallocate when they reach their capacity. Moving the stack entails rewriting the address of every variable on the stack. Go is only able to do this because it tracks every use of a variable’s address and so can rewrite the addresses as necessary.

Working with goroutines

We can rely on the blocking nature of channel operations to automatically create synchronisation points in our code. The runtime will detect when a goroutine is blocked and switch to another runnable goroutine. Eventually, assuming no deadlocks, a goroutine will run that unblocks the first and progress continues. We can write our code in a straightforward, declarative style without worrying about sequencing operations in the correct order. The scheduler takes care of that. There’s therefore very little distinction between synchronous and asynchronous Go code. A direct function call occurs synchronously in the caller’s goroutine. Calling a function with the go keyword creates a new, asynchronous goroutine.

Let’s look at how to use channels to synchronise goroutines. In this example, we offload a slow computation to a separate goroutine:

1 package main

2

3 import (

4 "fmt"

5 "time"

6 )

7

8 func slowCompute(a, b int) int {

9 time.Sleep(2 * time.Second)

10 result := a * b

11 fmt.Printf("Calculated result: %d\n", result)

12 return result

13 }

14

15 func main() {

16 go slowCompute(5, 10)

17 fmt.Println("Doing other work...")

18 }

This doesn’t work as intended:

1 Doing other work...

The program doesn’t wait for slowCompute before exiting! The problem is that the main goroutine has not been instructed to wait for slowCompute. What are we missing? Synchronisation! We fix this by having slowCompute write to a channel and main read from it. As we know, a read operation on an empty channel will block until a value is sent:

1 package main

2

3 import (

4 "fmt"

5 "time"

6 )

7

8 func slowCompute(a, b int, result chan<- int) {

9 time.Sleep(2 * time.Second)

10 result <- a * b

11 }

12

13 func main() {

14 result := make(chan int)

15 go slowCompute(5, 10, result)

16 fmt.Println("Doing other work...")

17 fmt.Printf("Calculated result: %d\n", <-result)

18 }

The <-result syntax means “read from result” and result <- means “write to result”. This works as expected:

1 Doing other work...

2 Calculated result: 50 // two seconds later

Channels just seem to be a roundabout way of implementing JavaScript’s async/await, right? Not so! Channels permit more complex patterns. It is trivial to implement task queues, worker pools and other concurrent patterns using channels. Here’s an example of distributing work over a pool of worker goroutines:

1 import (

2 "fmt"

3 "sync"

4 "time"

5 )

6

7 func worker(id int, jobs <-chan int, results chan<- int, wg *sync.WaitGroup) {

8 fmt.Printf("Starting worker %d\n", id)

9 for job := range jobs {

10 fmt.Printf("Worker %d performing job %d\n", id, job)

11 time.Sleep(2 * time.Millisecond)

12 results <- job * 2

13 }

14 wg.Done()

15 }

16 func main() {

17 var wg sync.WaitGroup

18 jobs := make(chan int, 100)

19 results := make(chan int, 100)

20

21 for w := 1; w <= 4; w++ {

22 wg.Add(1)

23 go worker(w, jobs, results, &wg)

24 }

25

26 for jobID := 1; jobID <= 20; jobID++ {

27 jobs <- jobID

28 }

29 close(jobs)

30

31 wg.Wait()

32 close(results)

33 }

There’s lots of interesting things going on here! We create two channels: jobs and results. Both have a buffer size of 100. We start up four workers in separate goroutines. We then write twenty jobs to the jobs channel. The workers pull jobs from the channel using the range keyword. The workers sleep briefly during processing to simulate performing a time consuming task. Once we’ve put our jobs into the channel, we close it to indicate that no more values will be written. That stops the workers ranging infinitely over the channel, forever waiting for values that never arrive. A waitGroup is a counter that’s incremented every time we call Add(1) and decremented every time we call Done(). wg.Wait() blocks the main goroutine until the counter is zero. In this case, that means that every worker has finished and all of the work is complete. The output will look something like this, though the exact ordering is non-deterministic and depends on how the scheduler chooses to run things:

1 Starting worker 4

2 Worker 4 performing job 1

3 Starting worker 1

4 Worker 1 performing job 2

5 Starting worker 2

6 Worker 2 performing job 3

7 Starting worker 3

8 Worker 3 performing job 4

9 Worker 1 performing job 5

10 Worker 3 performing job 6

11 // ...

Go makes it easier to sequence concurrent operations and to reason about how control flows through a concurrent application. Nevertheless, writing correct concurrent code still requires some care. Here is a trivial example that deadlocks:

1 package main

2

3 import "fmt"

4

5 func work(channel chan int) {

6 channel <- 1

7 }

8

9 func main() {

10 channel := make(chan int)

11 go work(channel)

12 select {

13 case value := <-channel:

14 fmt.Printf("%d\n", value)

15 }

16 channel <- 2

17 close(channel)

18 }

We start off a worker in a separate goroutine and then block until a value is available using select. That will work. Trying to send the second value to channel is what causes the deadlock. Since channel is unbuffered, the write will block the main goroutine until something reads it. That gives the scheduler a chance to run other goroutines and hopefully one of them will read from the channel. However, in this instance there is no other goroutine trying to read from the channel and so the program blocks forever – deadlock! Admittedly, the cause of the deadlock in this contrived example is pretty obvious but it’s surprisingly easy to cause subtle deadlocks in more complex code. On the plus side, the Go compiler includes a deadlock detector that can often find deadlocks and even show the faulty line of code.

Conclusion

We live in interesting, concurrent times. In this chapter, we saw that changes in computer architecture are driving changes in how we write software. Programs need to be able to take advantage of multiple processor cores. Concurrent programming involves writing code that can deal with multiple things at once. Parallelism means literally performing multiple operations at once and requires hardware support. Asynchronous programming means writing code that does not block while waiting for operations to complete. All of them require thinking about programming in a different way.

Multiple threads running in the same address space create the problem of shared, mutable state leading to non-determinacy. One simple solution, the lock, is deceptively difficult to use correctly and safely. Concurrent programming models provide mechanisms to manage shared, mutable state that are easier to reason about. JavaScript’s single-threaded, asynchronous model relies on the language runtime to provide concurrency, greatly simplifying things for the programmer. The event loop approach is simple to understand but easy to block and has no scope for parallelism. JavaScript has struggled to provide effective means to coordinate asynchronous operations, though async/await is a big step forward. Go implements userspace threading in the form of goroutines. It avoids data races by communicating state between goroutines via channels. Go programs scale easily to multiple cores with no changes in syntax. The downside is the implementation cost of a userspace scheduler, the cumulative memory cost of many goroutines and the risk of deadlocks.

Further reading

Many of the textbooks cited in the operating systems chapter include thorough treatments of the concurrency primitives provided by operating systems. If you haven’t already read Operating Systems: Three Easy Pieces, now would be a good time to start. Herb Sutter’s article The Free Lunch is Over: A Fundamental Turn Toward Concurrency in Software is a little dated now but provides some useful background context on the hardware changes driving developments in concurrent programming.

When those textbooks warn gravely of how threads and locks lead to dreadful concurrency bugs, it’s sometimes difficult to grasp just how subtle the bugs can be. Deadlock Empire is a fun, little game that challenges you to find and exploit concurrency bugs. You must slay an evil dragon that, fortunately for us, uses locking mechanisms to protect itself. The simple fact that finding concurrency bugs in lock-based code can be made into a mind-bending puzzle game illustrates just how hard it is to write correct code with locks.

If you would like to know more about the basis for Go’s concurrency model, I recommend the original paper: Communicating Sequential Processes by C.A.R. Hoare. It’s easy to find online and you can even find the problems from the paper solved in Go in this package.

Seven Concurrency Models in Seven Weeks by Paul Butcher provides a pacey introduction to a range of concurrency models implemented in a few languages (including Java, Erlang and Clojure). I recommend it both as a good overview of the problem space and as a window into some environments you might not yet have come across (particularly Erlang).

At the risk of stating the obvious, the best thing you can do to improve your understanding of concurrent programming is to write code using different concurrency models. If you know JavaScript, you’re already writing concurrent code, but have you tried the full range of tools at your disposal? Try implementing the same functionality using callbacks, promises and async/await. Which is easier and most ergonomic? What problems do you encounter? (What colour is your function? highlights some of the issues with async/await.) Learning Go, Erlang, Elixir or any language with a distinctive concurrency model is a powerful way to make yourself rethink how programs are executed and to develop a more sophisticated mental model.